Introduction

This Collection Selection Box showcases examples of artists and designers exploring the ideas, processes, applications, and implications of artificial intelligence (AI). AI is an umbrella term that describes a set of technologies intended to simulate activities that are otherwise thought to be human. These include, but are not limited to, learning, problem-solving, decision-making, visual perception and creativity. AI originated as a research field in the 1950s, shaped by cognitive psychology, mathematics, engineering, computing and cybernetics, which refers to the study of feedback and control in living and machine systems. It also developed from broader interests in automation and mechanisation.

From the outset, AI researchers have pursued various approaches to crafting intelligent machines. One early approach, known as symbolic AI, sought to translate knowledge and intelligent behaviours into explicit symbols and logical rules in the form of algorithms, which were directly programmed to create “expert systems”. The second approach favoured learning-based methods, which relied on patterns learned from data and used early forms of neural networks. Symbolic AI excels at specific tasks that require clear, logical reasoning and transparent decision-making, but it can be limited. By contrast, learning-based approaches excel in handling complex, unstructured data such as images. However, decision-making processes can be unclear to humans. Learning-based approaches remained largely theoretical until the turn of the millennium, which saw advances in powerful computer hardware and the availability of large datasets from the growing World Wide Web.

In the early 21st century, AI development was dominated by machine learning and deep learning approaches. Machine learning is a subset of AI that utilises algorithms to detect patterns in training datasets and make predictions based on that data. Deep learning is a more complex subset of machine learning that uses artificial neural networks inspired by the structure and function of the human brain. These systems are trained on vast datasets, often scraped from the internet, and decision-making processes are opaque. This use of data and opaque decision-making introduces concerns about the potential for AI systems to inherit and exacerbate human biases in datasets, as well as provide inaccurate responses. Other concerns include data privacy, consent, and the impact of AI automation on work and human labour. Many contemporary artists working with AI engage with these issues, often using machine learning tools directly to critically explore the cultural, political, and aesthetic dimensions of AI technologies in contemporary life.

This Collection Selection box showcases how artists and designers have engaged with AI developments as creative tools and collaborators, as well as sources of inspiration and subjects of critical inquiry, from the 1970s to the 2020s. Moving through early ideas and applications of symbolic AI to machine learning and deep learning applications, including generative AI, and incorporating related inquiries in artificial life and robotics, the works in this box illustrate just how expansive the creative responses to AI developments have been and continue to be.

The box contains just a small selection of related works in the collection. It also introduces some additional works, including films, that could not fit in the box. We have many more works that you can view online or request to see in the Print Room on your next visit.

View Explore the Collections for more works related to artificial intelligence.

Neighbourhood Count, Paul Brown, 1991

Paul Brown (born 1946) is a British artist specialising in computational and generative art since the 1970s. After attending the 1968 art and technology exhibition, Cybernetic Serendipity, he realised that, with computers, he could realise his systems-based approach to artmaking. In 1970, Brown encountered John Conway’s computer program “Game of Life”, which continued to inspire him throughout his career. Conway’s Game of Life is a zero-player game, played out on a grid of black and white cells, where the initial state determines its evolution and complex behaviours. The game raises questions about whether complex intelligence can emerge from simple programmed rules.

Conway’s Game of Life is a class of mathematical phenomena called Cellular Automata (CA), originally proposed by mathematician and computer scientist John von Neumann in the 1940s. CAs informed early AI research and were used to model complex systems, including neural networks and decentralised decision-making. With CAs, Brown was compelled by how a few simple rules could generate complex outcomes and sought to develop geometric interpretations of CA operations.

In 1977, Brown began studying at the Slade School of Art’s Experimental Computing department, where he met other key computer artists, including Ernest Edmonds, John Lansdown and Harold Cohen. There, he began to work on generative systems that would later be understood as Artificial Life: the programmed simulation of fundamental processes and characteristics of living organisms and systems, informed by biology and complexity theory, and with applications in bottom-up robotics.

Brown’s 1991 work, Neighbourhood Count, is part of a series begun during this period. The work consists of 17x17 matrices with eight cells. Following Conway’s Game of Life, each cell begins in one of two states (live or dead), and every cell interacts with its neighbouring cells. The states of each cell simultaneously influence the consequent state of their neighbours, for example, any live cell with fewer than two live neighbours dies from “underpopulation”. The resulting piece depicts the 256 possible neighbourhood states. This aesthetic interpretation of CA operations demonstrates how artists extended the reach of AI-related concepts far beyond their original use contexts, bringing them to new audiences through artworks.

Drawing, Harold Cohen, 1973

Harold Cohen (1928 – 2016) was a British-born abstract painter and textile designer known for creating the computer programme AARON, widely considered the first AI artmaking programme. Cohen was introduced to computers in the late 1960s while teaching at a university in California and immediately saw an opportunity to pursue questions about the nature of artmaking and why we draw. He wanted to explore whether his artistic preoccupations, decision-making and intentions could be translated into code and executed by a machine, and whether a computer might possess more creative autonomy than other tools, such as cameras, in this context.

By 1971, Cohen had presented his ideas and initial prototype painting system at a computing conference. In 1973, he was invited to Stanford University’s Artificial Intelligence Laboratory, where he developed AARON. Initially simple, Cohen defined rules for drawing, combined with random variables, that directed AARON’s drawing. AARON exemplifies the early approach to AI systems, being pre-programmed with rules of intelligent behaviour. AARON could not learn new techniques independently but was continually refined by Cohen throughout his career. Despite this, Cohen did not see AARON purely as a tool or an instrument. Instead, AARON was a functionally independent entity capable of generating an endless succession of different drawings.

Cohen’s work with AARON took AI development beyond its conventional computer science applications in the 1970s and 80s. He used AI in novel ways to pose more philosophical questions about creativity, perception, intention and collaboration that AI researchers and computer scientists were not otherwise asking. While AI was largely confined to academic research and industrial problem-solving at the time, Cohen brought it into creative and cultural domains, introducing AI to new audiences through gallery exhibitions. The questions and debates his work sparked about computational creativity, collaboration and artistic agency have continued among artistic communities ever since.

In its early phases, images created by Cohen’s AARON software were drawn on paper by a small floor-based robot equipped with a marker pen, which executed AARON’s instructions as line drawings on paper or canvas. Other versions employed a robotic drawing arm, while Cohen later favoured screen-based renderings, projections and digital prints, following the wider adoption of digital display technologies. AARON’s drawing style also evolved; initially, AARON produced monochromatic abstract line drawings, which Cohen often hand-coloured. Later versions of AARON drew more recognisable forms, including foliage and human figures, and could autonomously select colour for the images. This drawing from 1973 demonstrates AARON’s simple, more abstract line drawings that were hand-coloured by Cohen as a collaborative act.

20:28, Harold Cohen, 1985

This 1985 plotter drawing, 20:28, is not coloured by AARON or Cohen, but demonstrates AARON’s evolution toward more clearly recognisable human figures. The drawing depicts three figures – one male, two female – with three balls. The male figure is sitting on the ball, watching the females as they dance. The work is uniquely signed by AARON, with the date and timestamp of its creation: 20:28 on the 16th of August 1985 (written here as 8-16-85).

Portrait, Patrick Tresset, 2011

Patrick Tresset (born 1967) is a French-born artist and researcher living and working in Belgium. Originally working as a painter and draftsman, Tresset belongs to a generation of artists who emerged from the computer department of Goldsmiths College in London. He has published research and produced artworks that explore artificial intelligence, aesthetics, and social robotics.

Tresset is best known for his performative, theatrical installations with robot drawing machines named “Paul”. Equipped with a robotic arm holding a pen, Paul produces observational drawings of sitters. This 2011 portrait depicts Douglas Dodds, former Senior Curator at the V&A.

Paul evolves from technologies developed in the collaborative project, “AIkon-II”, based at Goldsmiths, University of London, where Tresset and Frederic Fol Leymarie explored drawing through computational modelling and robotics. With Paul, Tresset aimed to translate his artistic expression and the actions of a drawer into computational systems that directed Paul’s actions. When drawing the human sitter, Paul exhibited attention and purpose. The aim was to create drawings with the quality and emotional resonance of human art, while keeping the drawing process engaging for both sitter and audience. During performances, Paul often paused to “study” the participant's face, simulating contemplation before resuming the work. The robotic arm would also intentionally squeak to evoke effort and the challenges of creative work, in an attempt to foster a connection between the sitter and Paul.

While not an implementation of AI technologies directly, the work showcases the wide range of artists working with ideas of autonomous machine intelligence, questions of computational creativity, kinship and our tendency to attribute lifelike qualities and our own human traits to robots and other mechanical devices.

Terram in Aspectu, Liliana Farber, 2019

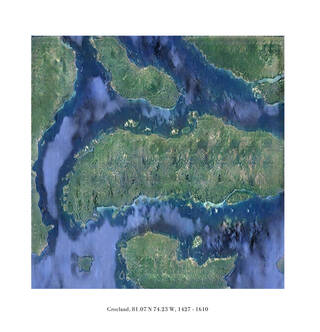

Liliana Farber (born 1983) is a Uruguayan-born, New York-based visual artist whose work explores notions of land imaginaries, unmappable spaces, utopias, truth, geopolitics, and techno-colonialism in the age of algorithms. Her research-based art practice spans still and moving images, installations and web-based works.

Farber has long been interested in how history, photography and geography are intertwined in the space between our online and offline worlds. The raw materials for her practice include antique maps, satellite imagery, geolocation points, and timestamps, and she uses these to reflect on the human experience of living in the age of big data and within global-scale infrastructures. Her 2019 series, Terram in Aspectu, features satellite images of remote islands that appear to be screenshots from Google Earth. Upon closer inspection, the images reveal themselves to be fictional; they are, in fact, representations of non-existent islands, originally referenced on historical maps. Produced by feeding information from historical sources into an open-source machine learning algorithm trained on Google Earth imagery, these machine-generated images present alternate versions of our world as understood through a machine lens: a world that sits between fact and fiction.

The work prompts us to consider the consequences of machine learning systems and other technologies that shape and influence our reality. With the work, Farber draws parallels between the colonial endeavour to map the world and contemporary Big Tech reproductions of colonial global power. It also draws on forms of mythology to question how veracity (the truthfulness of images and data) and trust are determined through existing and new institutions of knowledge in an algorithmic and post-truth age. At the same time, with their ambiguity and illegibility, the images offer up the possibility of being lost, disconnected, or undiscovered in a hyper-connected world.

Anatomy of an AI System, Kate Crawford and Vladan Joler, 2018

The project, Anatomy of an AI System, was developed and published online in 2018 by Kate Crawford (born 1974), Vladan Joler (born 1977), and teams of technology and social sciences researchers. Crawford investigates the sociopolitical implications of artificial intelligence (AI) and co-founded the AI Now Institute in New York. Joler is an artist, new media professor, and director of SHARE Lab, which investigates algorithmic transparency, digital labour and other intersections of technology and society. Crawford and Joler’s research project and resultant visual essay unearth the hidden technical and human infrastructure behind AI systems—specifically, the Amazon Echo smart home device, first released in 2014, and the connected cloud-based voice assistant, Alexa.

The publication serves as a contemporary record of previously obscured aspects of our digitally connected world. It begins with a simple interaction with Alexa via the Echo device, then journeys through the vast matrix of material, human and technical processes, including material resource extraction and energy consumption, human labour, and vast quantities of data that underpin our everyday human-machine exchanges. In telling this story, the project challenges the idea of immaterial, disembodied and purely “artificial” intelligence. It also highlights contact points between governments, corporations, and Alexa users, contributing to debates over privacy and the role of digital product design in normalising surveillance technologies in our homes.

This publication is a printed version of the online website content, adapted for further dissemination at exhibitions and events. The V&A also holds a printed copy of the diagram, a PDF version of this publication, and an archived version of the website. The whole project can also be found online at: https://anatomyof.ai.

The Economist magazine, 11 – 17 June 2022

In 2022, generative AI applications such as text-to-image models Dall-E and Midjourney, and the chatbot ChatGPT, were first unveiled to the public. Breakthroughs in the development of foundation models, which are large-scale, general-purpose AI systems, also known as transformer models, underpinned developments in large language models and diffusion models. These enabled sophisticated, general-purpose AI systems with widespread public appeal. For the first time, applications including ChatGPT and Dall-E offered free, instant, and seemingly limitless generation of text and images from a simple text prompt, requiring little technical or creative expertise.

These new applications marked a shift in consumer access to AI systems. The rapid popularity of this new generation of freely available, user-friendly AI applications sparked ongoing debates over copyright, environmental impacts, AI safety, machine consciousness, and existential risk. These debates bolstered the perceived power of these developments and brought AI development to the forefront of public, media, and political attention.

This edition of The Economist magazine was released in June 2022, just on the cusp of the generative AI explosion. The cover image was generated using an early version of Midjourney, created with the prompt “artificial intelligence’s new frontier” and a collage-style feature. The unusual image of a humanoid robot announces a new era of AI and introduces several articles on the promises, potential, and risks of advanced, general-purpose AI. The articles inside capture prescient debates on creativity, copyright, authorship, human labour, and the future of work, particularly creative work, that were ubiquitous following the popular adoption of these tools.

Soon after the release of generative AI tools, their underlying technologies were increasingly integrated into existing creative applications and consumer products, and AI-generated images and text became increasingly indiscernible. The magazine issue, therefore, importantly captures the initial cultural and technological disruption of generative AI as it unfolds, and when it is most visible and tangible.

The Economist is a newspaper published online and in a weekly printed magazine form, distributed in various regions worldwide. It was founded in 1843 and concentrates on current affairs, economics, and drivers of change in technology and geopolitics. This AI cover featured on the US and Asia editions.

For further viewing

MEMORY, Sougwen Chung, 2017 – 22

Sougwen Chung is a Chinese-Canadian artist, programmer, and researcher who works at the forefront of AI, machine learning, and advanced robotics. Since 2015, Chung has developed a series of robot drawing collaborators called D.O.U.G (Drawing Operations Unit Generation_X). MEMORY involves Chung’s second generation, called D.O.U.G_2, with each generation investigating the line between machines and human creativity, and the tensions between kinship and otherness in our relationship with machines.

In the era of big data and AI, MEMORY explored how AI systems might influence future memory. Chung used a machine learning algorithm called Recurrent Neural Networks (RNN) to explore computational memory, mediation, and collective and personal histories in big datasets. RNNs are characterised by their ability to “remember”, so rather than processing inputs discretely, the RNN’s outputs are shaped by all past data inputs. Chung trained the RNN on two decades of their past artworks, which were archived, digitised and categorised for this purpose. Through this process, the RNN “learned” Chung’s artistic style, which is reinterpreted by D.O.U.G_2, who drew simultaneously with Chung. This is not an exercise in drawing automation but collaboration. It intentionally expands the “artist’s hand” and reflects Chung’s preoccupation with the machinic interpretation of human gesture, movement and intention.

Chung’s later work has evolved through generations of D.O.U.G., exploring other types of data input, ranging from city-scale movement to the body’s electrical signals. However, D.O.U.G._2 remains a lifelong project for Chung, who has since fed it with new drawings, many of which are already the results of collaborations. Chung’s drawing performances, including MEMORY, take AI beyond the screen and strictly digital outputs. The V&A has acquired the resulting collaborative drawing, a version of the RNN model, and a performance video documenting the creative process.

The Butcher’s Son, Mario Klingemann, 2017

Mario Klingemann (born 1970) is a German artist who works with machine learning, code, and algorithms. He is interested in generative and evolutionary art, data visualisation, and robotics. Since learning programming in the early 1980s, he has explored human creativity, questioned the capacities of advanced technologies to emulate and augment these processes, and sought to understand and subvert the inner workings of opaque systems.

Klingemann’s 2017 work, The Butcher's Son, was created using a Generative Adversarial Network (GAN) trained on stick figures and images of the human body from the web. The piece is part of his Imposture Series, which sought to transform stick figures into figurative “paintings” to explore posture and the machinic depiction of the human body.

A GAN is a machine learning model that generates new data, such as an image, that resembles a given dataset. Developed in 2014, it consists of two neural networks: a generator, which creates new images from random noise, and a discriminator, which evaluates the similarity of the new images to the training dataset. Unlike earlier forms of programmed AI, GANs “learn” by analysing many images to recognise patterns. As both networks improve through the process, it continues until the generator has produced an image almost indistinguishable from the dataset. The distinct aesthetic results of this process—the visible AI “artefacts” and the surreal, occasionally grotesque and non-human forms generated by the machine—are a crucial consequence for Klingemann, as the complexity of the human figure challenges and reveals the limits of AI capabilities in the 2010s.

Selected photographs from 'Myriad (Tulips)’, Anna Ridler, 2019

Anna Ridler (born 1985) is an artist and researcher who lives and works in London. She works with machine learning technologies, including Generative Adversarial Networks (GANs), to better understand these technologies and to interrogate systems of knowledge embedded within and produced by them. Her work draws attention to the human behind automation and artificial intelligence technologies.

Much of Ridler’s recent work centres on ideas of speculation and draws on the history of the “Tulip Mania” that swept the Netherlands and much of Europe in the 1630s, when the price of tulips soared before dramatically crashing. In 2019, while based in the Netherlands, she produced and classified 10,000 photographs of tulips over a 3-month seasonal span. Photographed and manually categorised by Ridler during this period, this dataset forms the work Myriad.

The resulting dataset is displayed as tiled photographic prints with hand-written annotations, which brings the digital dataset back into the physical realm. Its size and detail remind us of the human labour, time, and resources required to create, classify, and maintain the huge datasets that underpin everyday machine-learning technologies. The handwritten metadata tags expose the difficulties of classifying even “simple” flowers into discrete categories, such as colours, encouraging us to reflect on the subjectivity and embedded values in both human and machine classification.

Sorting Song, Simone C Niquille, 2021

Simone C Niquille (born 1987) is a Swiss designer and researcher based in Amsterdam. Through her practice, technoflesh Studio, she produces films and writing that investigate computer vision technologies, the images they create, and the worlds they construct through classification.

Niquille’s 2021 film Sorting Song is a computer-generated film that explores how everyday objects become machine-readable for computer vision technologies. It explores classification processes, asking how we know when a chair is a chair. To produce the film, Niquille sourced a series of objects from the SceneNet RGB-D indoor training dataset: a large-scale library of 3D models, floor plans, and domestic objects compiled in 2017 by the Dyson Robotics Lab at Imperial College London to develop machine vision capabilities for domestic robots. Using these objects and their machinic classifications, the film features a conversation between two young voices that question the nature of things, drawing on the nursery rhyme in which children learn to name objects and distinguish them from one another.

Sorting Song shows us where our designed world has become machine-readable. It encourages us to reflect on object classification removed from the nuances provided by human context: When is a vase a bowl? Is a toilet a chair? Niquille advocates against machine learning as a tool to encode assumptions and reduce reality. Sorting Song playfully draws attention to how embedded assumptions in software can lead to potential mistakes in interpretation. It asks us to consider how these reductive and mistaken interpretations will shape our future reality in which robots may start to cohabit with humans in everyday life.