AI has since evolved and is increasingly shaping how we create, communicate, and make decisions, becoming embedded in everyday experiences and fields, such as healthcare, logistics, finance and policing. While the technology is driving innovation and offering numerous benefits, its pervasive reach also raises urgent ethical and societal questions around privacy, bias, environmental impact, and the potential for job displacement.

Here we present 10 objects from the V&A collection that help tell the story of AI’s development.

Automation and mechanical men in the early 20th century

In the early 20th century, decades before AI research began, robots and mechanisation were popular themes in the cultural imagination, extending a much longer history of automata. The term “robot” originated in Karel Čapek's 1920 popular dystopian science fiction play, R.U.R. (Rossum's Universal Robots), in which robots are created to serve humans but eventually come to dominate them. Derived from the Czech “robota”, meaning “forced work”, the term “robot” soon replaced “automaton” and “android” in popular use. In 1928, the UK’s first working robot, Eric, was unveiled. From then on, the idea of machine intelligence, where “mechanical men” symbolised an uncertain future, became a popular science fiction theme in literature and films, including Fritz Lang’s 1927 film, Metropolis.

Edward McKnight Kauffer, advertising designs for Shell Mex and BP (1930s)

Mechanical men also appeared in advertising and corporate branding, including Shell-BP's brand mascot designed by American graphic designer Edward McKnight Kauffer. Shell-BP’s mechanical man mascot reflects the technological optimism of the interwar years and a cultural fascination with mechanisation. By employing the future-facing imagery of robots in the 1920s – 30s, Kauffer presents Shell-BP’s products as scientifically advanced and leading technological progress.

20th century AI developments and artistic inquiries

With robots alive in the cultural imagination, the 1940s saw theoretical and practical developments in computing. Then, in 1950, British computer scientist Alan Turing asked: “Can machines think?” With the Turing Test, he explored the idea that if a machine’s conversation cannot be told apart from a person’s, the machine can be said to possess human intelligence.

The AI research field was officially established in 1956 at the Dartmouth Workshop, held at Dartmouth College in New Hampshire, United States. The 8-week workshop brought together key minds from across the UK and US working in cognitive and computer science, mathematics and engineering, and was premised on the idea that any aspect of learning could be precisely described and, therefore, simulated by machines.

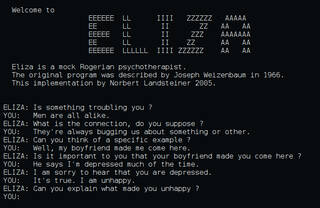

Following the Dartmouth Workshop, AI quickly became a mainstream idea in research communities, and its development thrived during the late 1950s and 1960s. At first, researchers pursued parallel approaches to creating intelligent machines. One approach, known as Symbolic AI, sought to translate knowledge and intelligent behaviours into symbols and rules in the form of algorithms (sets of instructions for completing a task). These were directly programmed to create “expert systems”. The second approach favoured learning-based methods, which relied on data patterns and used methods such as early forms of neural networks. This approach, which aimed at more flexible cognitive processes, enabling machines to improve through experience with less human direction, would remain largely theoretical until the turn of the 21st century. Symbolic AI emphasised logic and human reasoning, and underpinned key projects such as the first mock therapist chatbot, ELIZA, in 1966. Around the same time, artists also began exploring the creative potential of computers and programmed intelligence.
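To give a sense of what “symbols and rules” can look like in practice, here is a minimal Python sketch of a rule-based responder in the spirit of ELIZA. It is not ELIZA’s original script: the patterns, the reply templates and the names `RULES`, `DEFAULT` and `respond` are all invented for illustration.

```python
import re

# Hypothetical hand-written rules: each pairs a text pattern with a reply template.
# These are illustrative only and are not taken from the original ELIZA script.
RULES = [
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT = "Please go on."


def respond(utterance: str) -> str:
    """Return the reply of the first rule whose pattern matches, else a stock phrase."""
    for pattern, template in RULES:
        match = pattern.search(utterance)
        if match:
            # Reuse the user's own words, minus any trailing punctuation.
            return template.format(match.group(1).rstrip(".!?"))
    return DEFAULT


if __name__ == "__main__":
    print(respond("I am tired."))
    print(respond("Hello"))
```

Nothing here is learned from data. Every behaviour is written out in advance by the programmer, which is the defining feature of the symbolic approach.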

Harold Cohen and AARON (1970s)

Harold Cohen was a designer and abstract painter who, after discovering computers in the late 1960s, saw the chance to pursue his ongoing questions about how and why we draw. With computers, Cohen sought to explore whether his drawing processes, artistic decision-making and intention could meaningfully be translated into computer code and actioned by a machine. After presenting his ideas at a 1971 computing conference, Cohen was invited to Stanford University’s AI Laboratory in 1973, where he developed the computer programme, AARON. Programmed by Cohen with a set of rules incorporating random variables, AARON is widely considered to be the first AI artmaking programme. AARON’s early drawings were produced by a small robot equipped with a marker pen, and later by a plotter machine, with the resulting abstract line drawings initially hand-coloured by Cohen.

AARON couldn’t independently learn new techniques but was continually refined by Cohen, eventually evolving from producing monochromatic abstract shapes to figurative drawings and selecting colours autonomously. However, Cohen never saw AARON purely as a tool. Instead, he used AI to ask more philosophical questions about creativity and collaboration. While AI was largely confined to academic research and industrial problem-solving in the 1970s and 80s, Cohen’s work brought AI into creative and cultural spaces, and to new audiences through gallery exhibitions, occasionally with AARON drawing live in the exhibition. The work sparked debates in artistic communities about creative agency and intention – questions that have been asked since artists first began using computers and which continue today across different creative applications of AI.

Paul Brown, Neighbourhood Count (1991)

Paul Brown’s generative art practice, where he designed a set of rules for a computer to make an artwork, drew on broader theories in AI research including artificial life and John Conway’s 1970s "Game of Life" in particular. Conway’s Game of Life is a zero-player game on a grid of black and white cells, where set conditions define cell behaviours and evolution depends on the initial state. Although Conway’s Game of Life is not considered AI, it is a form of Cellular Automata (CA), originally proposed by Hungarian-American mathematician and computer scientist John von Neumann in the 1940s to model the behaviour of complex systems by breaking them down into simple, discrete components. Von Neumann’s CA framework informed early AI research, particularly developments in artificial neural networks and decentralised decision-making, while the popularity of Conway’s Game of Life in the 1970s sparked questions about the emergence of life and intelligence from simple rules. When Brown discovered Conway’s Game of Life, several years after attending the 1968 exhibition Cybernetic Serendipity, the first comprehensive exhibition on art and technology, he found a way to pursue emergent complexity and systems-based thinking in his art.

Brown's 1991 work, Neighbourhood Count, comprises 17x17 matrices of eight cells. As in Conway’s Game of Life, each cell begins in one of two states (live or dead), and every cell interacts with its neighbouring cells. The state of each cell simultaneously influences the subsequent state of its neighbours; for example, any live cell with fewer than two live neighbours dies from “underpopulation”. The piece depicts the resulting 256 possible neighbourhood states.
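The figure of 256 follows from simple counting: a cell has eight neighbours, each either live or dead, giving 2^8 = 256 combinations. The short Python sketch below enumerates them and applies the standard rules of Conway’s Game of Life (a live cell survives with two or three live neighbours; a dead cell comes alive with exactly three). It illustrates the arithmetic only and is not Brown’s own program; the function names are hypothetical.

```python
from itertools import product


def next_state(alive: bool, live_neighbours: int) -> bool:
    """Standard Conway rule for a single cell."""
    if alive:
        # Fewer than two live neighbours: underpopulation. More than three: overpopulation.
        return live_neighbours in (2, 3)
    # A dead cell becomes live only with exactly three live neighbours.
    return live_neighbours == 3


def all_neighbourhoods() -> list:
    """Every way eight neighbours can each be dead (0) or live (1)."""
    return list(product((0, 1), repeat=8))


if __name__ == "__main__":
    neighbourhoods = all_neighbourhoods()
    survivals = sum(1 for n in neighbourhoods if next_state(True, sum(n)))
    births = sum(1 for n in neighbourhoods if next_state(False, sum(n)))
    print(len(neighbourhoods), survivals, births)
```

Of the 256 neighbourhoods, only those with two or three live neighbours keep a live cell alive, and only those with exactly three bring a dead cell to life.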

Like Harold Cohen, Brown’s engagement with AI-related concepts and investigations transforms these ideas into novel aesthetic forms, extending their reach far beyond the context of their original use and to new audiences. The works showcase the rich exchange of ideas between scientific and artistic communities.

Breakthrough moments: the battle of human vs machine intelligence

Through the mid- to late 20th century, ideas of humanoids and superintelligent machines persisted through science fiction films, including Blade Runner (1982) and The Terminator (1984). By contrast, a reduction in interest and funding of AI in this period meant less AI research was being conducted. Nevertheless, ongoing activity and new powerful computer hardware enabled breakthroughs in the 1990s that presented the prospect of superintelligent machines and refocused public attention on AI development.

One such landmark moment to capture the public imagination was IBM’s Deep Blue defeating chess champion Garry Kasparov in 1997 – a media spectacle broadcast live on massive screens to packed, tense audiences and streamed online over a then-fledgling Internet. Deep Blue was no ordinary computer of its time, but a supercomputer standing two metres tall, weighing 1.4 tonnes and evaluating 200 million chess positions per second. Its performance drew attention to the battle of human versus machine intelligence because the win was secured through an unexpected move that demonstrated conceptual subtlety. Where chess was long seen as an ideal test for machine intelligence, Deep Blue’s winning move signalled a future where AI could surpass human cognition and skill.

Eduardo Kac, Move 36 (2005)

Brazilian-American artist Eduardo Kac explores and captures the cultural interests and anxieties around Deep Blue’s breakthrough in his work Move 36, which refers to Deep Blue’s dramatic move. Kac’s original 2002/04 installation featured a chessboard of dark earth and white sand alongside a wall-projected video grid. This grid displayed animated video loops at different intervals, representing the vast number of possible chess moves. The sole piece on the chessboard was a genetically modified plant, engineered by Kac to carry philosopher René Descartes’ 17th-century principle “cogito, ergo sum” (I think, therefore I am) translated into genetic code (by converting each letter into binary code using ASCII values, then into sequences of DNA elements). With this, Kac revisits and questions Descartes’ original argument that thinking proves conscious existence in a new age of intelligent “thinking” machines that threaten to destabilise these foundational human traits.
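The letter-to-binary-to-DNA conversion can be sketched in a few lines of Python. The text above does not say which pair of binary digits corresponds to which DNA base, so the mapping used here (00 to A, 01 to C, 10 to G, 11 to T) is an assumption chosen for illustration, as are the function names.

```python
# Assumed mapping from pairs of bits to DNA bases (not specified in the text above).
BASES = {"00": "A", "01": "C", "10": "G", "11": "T"}
BITS = {base: bits for bits, base in BASES.items()}


def text_to_dna(text: str) -> str:
    """Encode ASCII text as DNA letters: 8 bits per character, 2 bits per base."""
    bits = "".join(format(byte, "08b") for byte in text.encode("ascii"))
    return "".join(BASES[bits[i:i + 2]] for i in range(0, len(bits), 2))


def dna_to_text(dna: str) -> str:
    """Reverse the encoding: bases back to bits, bits back to ASCII characters."""
    bits = "".join(BITS[base] for base in dna)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8)).decode("ascii")


if __name__ == "__main__":
    encoded = text_to_dna("COGITO ERGO SUM")
    print(encoded)
    print(dna_to_text(encoded))
```

Under this mapping each character becomes four bases. For example, “C” is 01000011 in ASCII, which splits into 01, 00, 00, 11 and so reads as CAAT.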

With the widespread media coverage of Deep Blue’s win, AI crossed a threshold into everyday life. Around the same time, AI entered the home through mass-market consumer products.

Everyday objects of AI

In the 1990s, the US-based company Dragon released the first speech recognition software for consumer PCs, bringing early natural language processing to mass-market use. A few years later, in 2002, American technology company iRobot released the Roomba, an autonomous vacuum cleaner that used simple pre-programmed behaviours, helping to realise longstanding dreams of domestic robots in popular culture.

Around the same time, the advent of the World Wide Web in the 1990s led to the availability of large-scale digital datasets. Alongside developments in powerful computer hardware, these advances enabled breakthroughs in machine learning (the use of algorithms to detect patterns in training datasets and make predictions and perform tasks based on that data) and deep learning (a more complex form of machine learning that uses artificial neural networks designed to mimic how the human brain learns). By the 2010s, machine learning research had further accelerated natural language processing techniques, powering conversational technology including Siri – first launched in 2010, and integrated into the Apple iPhone in 2011 – and smart home devices including the Amazon Echo.

Amazon Echo Smart Speaker (2014)

The Amazon Echo was first released in the US in 2014 and in the UK in 2016. It connected to Amazon’s “intelligent” voice assistant, Alexa, which provided access to Amazon services and personal organisational functions, played music and gave news updates. The device used a microphone array to record the user’s voice command, which was processed through cloud-based machine learning technologies and answered by an automated voice. In the V&A collection, the Echo represents the introduction of machine learning and always-on connected devices in the home, and the debates around data privacy, surveillance, and gender representation.

Artists react to AI’s integration in everyday life

The increasing integration of machine learning into everyday life has intensified debates over AI transparency and ethics. This has inspired investigations and critical questions from artists and designers whose practices document and illuminate AI processes and effects over time.

Kate Crawford and Vladan Joler, Anatomy of an AI System (2018)

Kate Crawford and Vladan Joler’s critical design project, Anatomy of an AI System (2018), unearths the hidden technical and human infrastructure behind Amazon’s Alexa and the Echo device. The project challenged the idea that AI is purely digital and intangible by showing the extent of human labour, material resources and energy consumption needed to produce and power devices like the Echo. It also highlighted privacy debates by illustrating contact points between governments, corporations, and the private lives of Alexa users in the home.

Anna Ridler, Myriad (2019)

Anna Ridler is another artist and researcher who interrogates machine learning processes and the human labour and vast resources behind them. In 2019, Ridler manually produced and classified 10,000 photographs of tulips over a 3-month seasonal span in the Netherlands. This dataset forms Myriad. Presented as tiled photographic prints, Myriad returns the digital dataset to the physical realm. It highlights the vast scale of datasets used to train computer vision software – typically billions of images, compared to Ridler’s 10,000. The hand-written metadata tags on the photographs also expose the difficulties of classifying even “simple” flowers in discrete categories, such as colours, encouraging viewers to reflect on the subjective values embedded in human and machine classification.

Nye Thompson, Words that Remake the World (2019)

Nye Thompson also engages with machine learning processes to examine our ways of knowing the world through data. Thompson’s Words that Remake the World (Visions of the Seeker) documents a three-year “machine performance” undertaken by a prototype AI system, The Seeker, between 2016 and 2019. The Seeker existed on the Internet and “watched” the world through millions of compromised security camera feeds, analysing and naming things it saw. Evolving from Thompson’s longer interest in surveillance, The Seeker reimagines Ptah Seker, the Ancient Egyptian god who created the world by speaking the words to describe it.

Through the project, Thompson explores the relationship between images and language via the growing ability of machines to analyse, classify and define the world through visual input. The resulting print in the V&A collection maps the objects and concepts described by The Seeker, capturing a specific moment in the story of AI and the language of computer vision technologies.

AI image-making in the 21st century

The 2010s also saw AI imagery reach the general public through programmes such as Google’s DeepDream, released in 2015, which used convolutional neural networks (a type of deep learning algorithm, particularly effective for analysing visual imagery) to detect and enhance faces and patterns in images. Around the same time, artists started exploring the image-generating capabilities of Generative Adversarial Networks (GANs). Developed in 2014 by Ian Goodfellow and colleagues at the University of Montreal, GANs involve two neural networks, a generator and a discriminator trained on an image dataset. The generator creates new images while the discriminator evaluates whether these resemble those in the dataset. This process achieved remarkable progress towards the production of realistic synthetic images.
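The back-and-forth between generator and discriminator can be shown with a deliberately tiny Python sketch. Real GANs use deep neural networks and image datasets; this toy version works with single numbers, gives each network just two parameters, and uses hand-derived gradients. The “dataset” (numbers clustered around 4.0), the learning rate and all names are assumptions made for illustration, and this is not the 2014 implementation.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function, mapping any number into (0, 1)."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 2000, lr: float = 0.01, seed: int = 0):
    """Alternate discriminator and generator updates on one-dimensional data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c), its belief that x is real
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)   # stand-in for one sample from the dataset
        noise = rng.gauss(0.0, 1.0)  # random input to the generator
        fake = a * noise + b

        # Discriminator step: push D(real) towards 1 and D(fake) towards 0.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w -= lr * (-(1.0 - d_real) * real + d_fake * fake)
        c -= lr * (-(1.0 - d_real) + d_fake)

        # Generator step: change a and b so the updated discriminator rates the fake higher.
        d_fake = sigmoid(w * fake + c)
        grad_fake = -(1.0 - d_fake) * w  # gradient of -log D(fake) with respect to fake
        a -= lr * grad_fake * noise
        b -= lr * grad_fake
    return (a, b), (w, c)


if __name__ == "__main__":
    generator, discriminator = train_toy_gan()
    print("generator parameters:", generator)
    print("discriminator parameters:", discriminator)
```

Each pass through the loop mirrors the description above: the discriminator is nudged to tell dataset samples from generated ones, then the generator is nudged to produce samples the discriminator is more likely to accept.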

Jake Elwes, Zizi Show (2019-23)

Jake Elwes’ drag cabaret, The Zizi Show, uses GANs to explore queer representation, ownership and autonomy in datasets. Recognising that machine learning systems had trouble identifying trans, queer and other marginalised identities, Elwes highlights how AI systems perpetuate society’s heteronormative biases (the belief that heterosexuality is the norm). In a version commissioned by the V&A, Elwes collaborated with a community of drag artists to create photographic datasets that “corrupt” and correct absences in existing datasets. These were then used to generate consensual deepfake videos – reclaiming a technology that can otherwise be oppressive and exploitative – while highlighting queer erasure and examining the aesthetic possibilities of machine learning systems.

The early 2020s saw a shift in everyday AI use following breakthroughs in foundation models – large-scale, general-purpose AI systems that underpin large language models (those focused on understanding and generating natural language) and diffusion models (those used primarily for image generation). These enabled the public release of powerful generative AI applications, including chatbot ChatGPT, and text-to-image models DALL-E and Midjourney, which offered free, instant, and seemingly limitless generation of text and images from a simple text prompt, requiring little technical or creative expertise.

While AI use in online language translation, voice assistants, and recommendation algorithms in streaming services like Netflix has quickly become understood as mainstream computing, new generative applications such as DALL-E and ChatGPT marked a step change in consumer access to and command of advanced AI capabilities. Due to widespread public interest in these systems (ChatGPT reached 1 million users in its first five days), they have sparked heated debates over copyright, environmental impact, AI safety, machine sentience and existential risk.

The Economist Magazine, June 2022

In the early 2020s, AI was at the forefront of political debate, investment and media attention, and appeared on various magazine covers, including the June 2022 edition of The Economist Magazine, which features a cover image generated with the AI programme Midjourney. Issued just before the widespread public release of text-to-image applications, the peculiar new cover image and title announce a new era of AI. The articles inside also reflect curiosity and concern, prefiguring debates over AI’s technological possibilities, the nature of creativity, authorship, labour, and the future of work. In the months and years since their public release, generative AI capabilities have been increasingly integrated into existing consumer products and workflows. The magazine issue captures generative AI's arrival, and its cultural and technological disruption, when it is most visible.

The V&A holds many more objects and artworks that help us think about how we live and create with AI and other advanced technologies. Discover more through Explore the Collections and in the Prints and Drawings Study Room. For more on designed automation and the changing nature of work in the 20th and 21st centuries, visit the Design 1900 – Now Galleries at V&A South Kensington.